“It’s safe to say that the people who volunteered to “shape” the initiative want it dead and buried. Of the 52 responses at the time of writing, all rejected the idea and asked Mozilla to stop shoving AI features into Firefox.”

The more AI is being pushed into my face, the more it pisses me off.

Mozilla could have made an extension and promoted it in their extension store, rather than adding cruft to their browser and turning it on by default.

The list of things to turn off to get a pleasant experience in Firefox is getting longer by the day. Not as bad as Chrome, but still.

Oh, this triggers me. There have been multiple good suggestions for Firefox in the past that were closed as wontfix with “this can be provided by the community as an add-on”. Yet they shove the crappiest crap into the main browser now.

Rather than adding cruft to their browser and turning it on by default.

The second paragraph of the article:

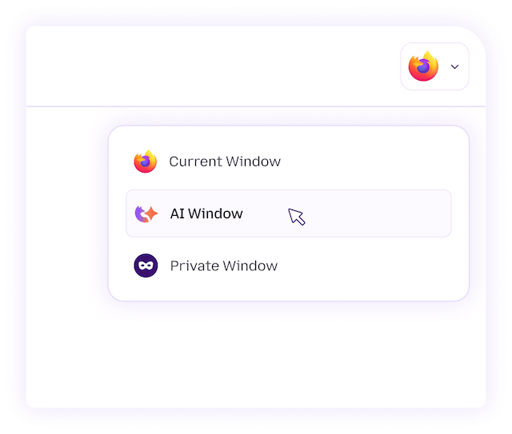

The post stresses the feature will be opt-in and that the user “is in control.”

That being said, I agree with you that they should have made it an extension if they really wanted to make sure the user “is in control.”

Switching to de-Mozilla’d Firefox (Waterfox) is as simple as copying your profile folder from FF to WF. Everything transfers over, and I mean everything. No Mozilla Corp, no opting out of shit in menus at all.

You want AI in your browser? Just add <your favourite spying ad machine> as a “search engine” option, with a URL like `https://chatgpt.com/?q=%s` and a shortcut like `@ai`. You can then ask it anything right there in your search bar. Maybe also add one with a query pre-written, like `https://chatgpt.com/?q=summarize this page for me: %s`, as `@ais` or something; modern chatbots have the ability to make HTTP requests for you. Then if you want to summarize the page you’re on, you do Ctrl+L Ctrl+C @ais Ctrl+V Enter. There, I solved all your AI needs with 4 shortcuts without literally any client-side code.

Emissions. Economic problems. Goading several people into suicide.
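The `@ai` / `@ais` keyword-search suggestion above is really just string templating: the browser takes whatever follows the keyword and drops it into the `%s` slot of the saved URL. Here's a minimal sketch of that idea, assuming the two templates from the comment and simple percent-encoding of the whole `q` value. The `KEYWORD_TEMPLATES` table and `expand_keyword_search` function are hypothetical illustrations, not Firefox's actual code or settings.

```python
from typing import Optional
from urllib.parse import quote

# Hypothetical keyword table mirroring the commenter's suggested custom
# search engines; these are not built-in browser settings.
KEYWORD_TEMPLATES = {
    "@ai": "https://chatgpt.com/?q=%s",
    "@ais": "https://chatgpt.com/?q=summarize this page for me: %s",
}


def expand_keyword_search(address_bar_text: str) -> Optional[str]:
    """Turn '@keyword some text' into a URL, or return None if no keyword matches.

    Simplifying assumption: the whole value after '?q=' (the pre-written
    prompt plus the user's text) is percent-encoded in one go. Real browsers
    encode the query and normalize the rest of the URL separately.
    """
    keyword, _, query = address_bar_text.strip().partition(" ")
    template = KEYWORD_TEMPLATES.get(keyword)
    if template is None:
        return None  # not a keyword search; a browser would fall back to its default engine
    base, _, q_template = template.partition("?q=")
    q_value = q_template.replace("%s", query)  # fill the %s placeholder
    return f"{base}?q={quote(q_value, safe='')}"


if __name__ == "__main__":
    # Ctrl+L, Ctrl+C, "@ais ", Ctrl+V, Enter, with the copied URL as the query
    print(expand_keyword_search("@ais https://example.com/article"))
```

The point of the sketch is that no model runs in the browser at all: the browser only builds a URL, and everything else happens on the chatbot's side.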

Like, if you ship a food item with harmful bacteria in it, it gets recalled. If you have a fatal design flaw in a car, it gets recalled. If your LLM encourages people to separate from their loved ones and kill themselves, nothing fucking happens.

Just render the page, page renderer.

monkey paw: curls

Yes, the page has been rendered by a large webpage model based on the URL.

Those unhappy have another option: use an AI‑free Firefox fork such as LibreWolf, Waterfox, or Zen Browser.

And I have taken that other option.

Also: Vanadium and/or Ironfox on Android.

A fork is great, but the more a fork deviates, the more issues there are likely to be. Firefox is already at low enough numbers that it’s not really sustainable.

Then Mozilla should start listening to their users instead of driving them away. I know I stopped using Firefox after being a regular user since launch, because the AI nonsense became the last straw.

Yes but we shouldn’t let perfect be the enemy of good.

What do you mean by “we shouldn’t let perfect be the enemy of good”? Why should I use a browser which is actively anti-user when there are better alternatives out there?

The good is other rendering engines currently in the works.

Then Mozilla should start listening to their users instead of driving them away.

I think the hope is to bring more people in than they lose. But with AI nobody will stay forever: the moment someone else makes a better AI tool, they’ll switch, because Mozilla loses its personality and uniqueness and starts to become replaceable… just like employees who are forced to use AI instead of their own work and knowledge.

My two biggest issues with a fork are: a) timely updates, they take a bit longer than the main version, and b) trust issues, I don’t trust most forks.

Try Phoenix for Firefox https://github.com/celenityy/Phoenix

What I don’t get: Isn’t Vanadium Chromium under the hood?

It is.

My understanding is that you go to Ironfox to optimize for privacy and Vanadium to optimize for security.

It depends on your threat model.

Either way, both are better on both fronts when compared to default Chrome or Firefox.

Yes. Chromium isn’t bad in itself though.

The truth is that Chromium is really good. It has the best security and performance.

Vanadium takes that and makes changes to make it more secure and private.

I think the problem with Chromium isn’t so much that Blink or V8 is bad or anything, it’s that it’s entirely under the thumb of Google. We’re essentially being set up for another Internet Explorer scenario, only, unlike Microsoft, Google won’t just be sitting on its laurels. Google is an advertising company; its entire business model is the web. Google Search is the tool used to find things, and with Google Chrome being the go-to browser for a lot of people, Google essentially ends up in control of both how you access the web and what you access.

That sort of power is scary, which is why I personally avoid anything Chromium based as much as I am able to. Chromium itself is fantastic, but I don’t like the baggage it comes with.

That’s valid.

That’s also part of the reason I like WebKit. It’s in a nice spot between Firefox and Chromium when it comes to security and performance. And importantly, it is not from an ad company and often passes on browser specs that would be harmful to privacy and security.

I forget what the site is called, but I saw one that nicely laid out different browser specs and gave the explanation for why one of the engine developers decided against supporting or implementing it.

I just wish there was a good Webkit browser on Linux. Unfortunately, Gnome Web just feels slow and unresponsive despite good benchmarks.

Gods I wish Epiphany/Gnome Web was better. The Kagi people are working on bringing Orion to Linux, which I believe will be using WebKit there as well.

It’s kind of funny that we don’t have a solid WebKit browser on Linux, since WebKit has its roots in the KDE Project’s KHTML engine for Konqueror.

I guess that kind of ties in to my anger at these massive tech companies profiting off of FOSS but doing almost fuck-all to contribute. Google opening LLM-generated bug reports against FFmpeg, when all of the streaming media giants are propped up by this one project, is just one example. There should be some kind of tax for this, I feel. They’re benefitting greatly and provide nothing in return.

Google Search hasn’t been usable for over a year.

Doesn’t mean that people don’t use it. Lots of people do.

Why not just distribute a separate build and call it “Firefox AI Edition” or something? Making this available in the base binary is a big mistake. At least doing so immediately and without testing the waters.

There is a Firefox Developer Edition, so I don’t see why not? I personally don’t care to see them waste the time on AI features.

Personally I don’t want AI anywhere.

I can think of some uses

But I’m feeling really sadistic today, and it mostly just boils down to ‘forced “paranoia” larp’.

I think Mozilla’s base is privacy-focused individuals, a lot of them appreciating Firefox’s open-source nature and the privacy-hardened Firefox forks. From a PR perspective, Firefox will gain users by adamantly going against AI tech.

It’s interesting that so many of those privacy-focused individuals use Windows and don’t have a single extension installed though.

It depends. If it’s just for the sake of plugging AI because it’s cool and trendy, fuck no.

If it’s to improve privacy, accessibility and minimize our dependency on big tech, then I think it’s a good idea.

A good example of AI in Firefox is the Translate feature (Project Bergamot). It works entirely locally, but relies on trained models to provide translation on demand, without having Google etc. as the middleman, and Mozilla has no idea what you translate, just which language model(s) you downloaded.

Another example is local alt-text generation for images, which also requires a trained model. Again, it works entirely locally, and provides some accessibility to users with a vision impairment when an image doesn’t have a caption.

Totally agree. Just because AI is generally bad and used in stupid ways doesn’t mean that all AI is useless or without meaning. Clearly, if you look at the trends, people are using chatbots as search engines. This is not Mozilla forcing anything on us; we are doing this. At that point I much prefer them to develop a system that lets us use GPTs to surf the web in the most convenient and private way possible. So far I have been very happy with how Mozilla has implemented AI in Firefox. I don’t feel the bloat, it is not shoved in my face, and it is under my control. We don’t have to make it a witch hunt. Not everything is either horrible or beautiful.

I’ve actually flipped on this position - but before you pull out your pitchforks and torches, please listen to what I have to say.

Do we want mass surveillance through SaaS? No. Do we want mass breach of copyright just because it’s a small holder and not some giant publisher, i.e. a “rules for thee” type vibe? Hell no. But do we throw the baby out with the bath water? Also: heck no. But let me underline a few facts.

- AI currently requires power-greedy chips that also don’t utilize memory effectively enough

- Because of this it’s relegated to massive, globe heating infrastructure

- SaaS will always, always track you and harvest your data

- Said data will be used in marketing and psy-ops to manipulate you, your children and your community

- The more they track, the better their models become, which they’ll keep under lock and key

- More and more devices are coming with NPUs and TPUs on-chip

- That is, the hardware has not caught up to the software yet

See where I’m going with this?

Add to that the fact that people like their chatbots and can even learn to use them responsibly, but as long as they’re feeding the corpos, it’ll be used against them. Not only that, but in true Silicon Valley fashion, it’ll be monopolized.

The libre movement exists to bring power back to the user by fighting these conditions. It’s also a very good idea to standardize things so that it’s not hidden behind a proprietary API or service.

That’s why if Mozilla seeks to standardize locally run AI models by way of the browser, then that’s a good thing! Again; not if they’re feeding some SaaS.

But if their goal and their implementation is to bring models to the general consumer so that they can seize the means of computing, then that’s a good thing!

Again, if you’d rather just kick up dust and bemoan the idiocy and narcissistic nature of Silicon Valley, then you’ve already given them what they want: that they, and they alone, get to be the sole proprietors of AI that is standardized. That’s like handing the average user over to a historically predatory ilk who’d rather build an autocracy than actually innovate.

Mozilla can be the hero we need. They can actually focus on consumer hardware, to give people what they want WITHOUT mass tracking and data harvesting.

That is, if they want to. I’m not saying they’re not going to bend over, but they need the right kind of pushback. They need to be told “local AI only, no SaaS”, and then they can focus on creating web standards for local AI, effectively becoming the David to Silicon Valley’s Goliath.

I know this is an unpopular opinion and I know the Silicon Valley barons are a bunch of sociopaths with way too much money, but we can’t give them monopoly over this. That would be bad!! We need to give the power to the user, and that means standardization!

Take it from an old curmudgeon. I’ve shaken my fist at the cloud, I’ve read a ton of EULAs and I’ve opposed many predatory practices. But we need to understand that the user wants what the user wants. We can’t stick our heads in the sand and just repeat “AI bad” ad nauseam. We need to mobilize against the central giants.

We need a local AI movement, and Mozilla could be at the forefront of this, if it weren’t for the pushback and outright cynicism people generally (and justifiably) have - but we can’t let these cretinous bastards hold all the AI cards.

We need libre AI, and we need it now!

Thank you for your consideration.

I agree that: SaaS = UaaP (User as a Product). Most importantly, AI is powerful and here to stay and if it’s completely controlled by the rich and powerful, then the rest of us are majorly screwed.

Small models, local models, models that anybody can deploy and control the way they see fit, PUBLIC models not controlled by the rich and powerful - these will be crucial if we’re going to avoid the worst case situation.

IMHO it’s better to start downloading and playing with local quantized LLMs (i barely know what i’m talking about here, i admit, but bear with me - i’m just trying to add something useful to the discussion), it’s better to start taking hold of the tech and tinkering, like we did with cars when they were new, and planes, and computers, and internet … so that hopefully there will be alternatives to the privately controlled rich-and-powerful-corpo models.

I don’t really like AI. Sure, it’s good for some things, but in the last 2 weeks I’ve seen some very negative and destructive outcomes from AI. I am so tired of everything being AI. It can have good potential, but what are the risks to the user experience?

What things is it good for?

Genuinely good for?

Edit: being downvoted, but nobody has an answer, do they?

Basically everything it’s used for that isn’t being shoved in your face 24/7.

- speech to text

- image recognition

- image to text (includes OCR)

- language translation

- text to speech

- protein folding

- lots of other bio/chem problems

Lots of these existed before the AI hype to the point that they’re taken for granted, but they are as much AI as an LLM or image generator. All the consumer-level AI services range from annoying to dangerous.

Is it actually both good and efficient for that crap, though? Or is it just capable of doing it?

Is it efficient at simulating protein folding, or does it constantly hallucinate impossible bullshit that has to be filtered out, burning a mountain and a lake for what a super computer circa 2010 would have just crunched through?

Does the speech to text actually work efficiently? On a variety of accents and voices? Compared to the same resources without the bullshit machine?

I feel like i need to ask all these questions because there are so many cultists out there contriving places to put this shit. I’m not opposed to a huge chunky ‘nuclear option’ for computing existing, I just think we need to actually think before we burn the kinds of resources this shit takes on something my phone could have done in 2017.

All of the AI uses I’ve listed have been around for almost a decade or more and are the only computational solutions to those problems. If you’ve ever used speech to text that wasn’t a speak-n-spell you were using a very basic AI model. If you ever scanned a document and had the text be recognized, that’s an AI model.

The catch here is I’m not talking about ChatGPT or anything trying to be very “general”. These are all highly specialized AI models that serve a very specific function.

a decade or more

Okay so not this trash that everyone’s going all-in on right now and building extra coal plants to power.

ai can be good as long as you don’t let it think for you. i think the problem is taking resources away from development and building into a browser what could just be a bookmark to a webpage.

why don’t they just instead put vivaldi’s web panel sidebar into firefox so you can just add chatgpt or whatever as a web panel. i think that would be infinitely more useful (and can be used for other sites other than ai assistants).

ai can be good as long as you don’t let it think for you

Unfortunately, there’s too many people already doing that, with not so clever results!

If it increases accessibility for those with additional requirements then great but we know that’s not even in its top 10 reasons for being implemented

Don’t they already have that last thing you mentioned?

it’s an addon but not baked in (not to the point like vivaldi where you can add any web panel as a url and have it display in the sidebar)

Doesn’t matter what the end-user wants. Corporate greed feeding into technological ignorance is gonna shove it down our throats anyway

I considered using AI to summarize news articles that don’t seem worth the time to read in full (the attention industrial complex is really complicating my existence). But I turned it off and couldn’t find the button to turn it back on.

If you need to summarize the news, which is already a summary of an event containing the important points and nothing else, then AI is the wrong tool. A better journalist is what you actually need. The whole point of good journalism is that it already did that work for you.

That should be the point but there is barely good journalism left.

How does AI mis-summarizing the (allegedly bad) journalism improve it?

It SLAMS away all the fluff.

I have a real journalist, but this is more on the “did you know this was important” side. Like how it’s fine to rinse your mouth out after brushing your teeth, but if your water isn’t fluoridated then you probably shouldn’t (which I got from skimming the article for the actionable information).

AI is very much not good at summarising news accurately.

https://pivot-to-ai.com/2025/11/05/ai-gets-45-of-news-wrong-but-readers-still-trust-it/

you have to be REALLY careful when asking an LLM to summarize news otherwise it will hallucinate what it believes sounds logical and correct. you have to point it directly to the article, ensure that it reads it, and then summarize. and honestly at that point…you might as well read it yourself.

And this goes beyond just summarizing articles: you NEED to provide an LLM a source for just about everything now. Even if you tell it to research the solution to a problem online, many times now it’ll search for irrelevant links and use those for its solution because, again, to the LLM it makes the most sense, when in reality it has nothing to do with your problem.

At this point it’s an absolute waste of time using any LLM, because within the last few months all models have noticeably gotten worse. Claude.ai is an absolute waste of time, as 8 times out of 10 all solutions are hallucinations, and recently GPT-5 has started “fluffing” solutions with irrelevant information, or it info-dumps things that have nothing to do with your original prompt.

I wish it was a clear option in my browser.

When I want AI, I use this: https://duckduckgo.com/?q=DuckDuckGo+AI+Chat&ia=chat&duckai=1

My worry about AI built into my browser is that it’ll be turned into data mining, training, and revenue generation resulting in exploitation and manipulation of me.

My worry about AI built into my browser is that it’ll be turned into data mining, training, and revenue generation

Isn’t the AI Mozilla is talking about all run locally?

I’ll be honest, I do not know, but I’m always more worried about where it’ll end-up over where it is right now. Even if it is all local for now, it is a small tweak for that to change. Just a small decision by a few people and everything changes. I don’t have enough trust to believe that decision won’t be made.

The post stresses the feature will be opt-in and that the user “is in control.”

Nothingburger