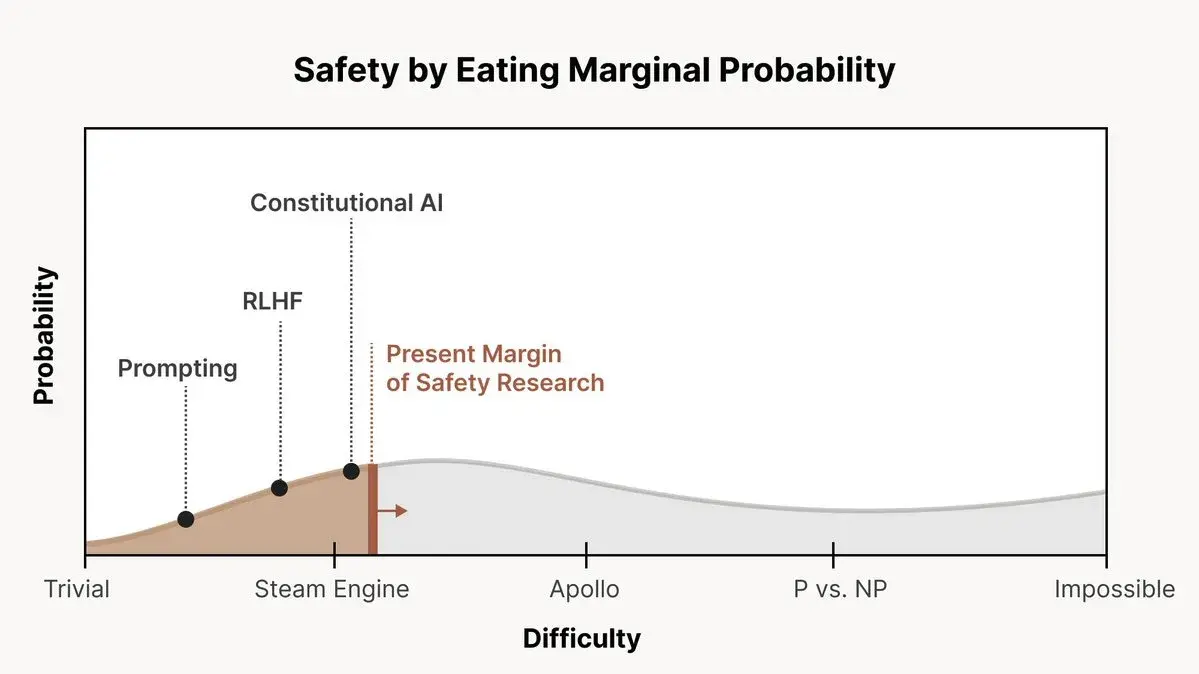

From this post, featuring “probability” with no scale on the y-axis, and “trivial”, “steam engine”, “Apollo”, “P vs. NP” and “Impossible” on the x-axis.

I am reminded of Tom Weller’s world-line diagram from Science Made Stupid.

Everybody add your own data points. Mine is Graph Creator Shits Self, just to the right of Steam Engine

the shitting what

it’s like someone saw the charts of the kind that get pitched to defense departments, and thought that’s how they should be made

opening the link and seeing

Crossposted from the AI Alignment Forum. May contain more technical jargon than usual.

followed by this powerpoint-filler-ass graph fucking sent me. shit looks like a prescription sales rep left it on the wall of my doctor’s office so I don’t go for the generic refill

oh wow @self this comment got successfully emailed to me

nice! good to know lemmy’s email code isn’t a complete write-off. any warnings in your email client from my weird setup?

nope! though its subject lines etc could do with work. this is what I got:

“display images” did not display any images

huh, now I’m curious what images it thinks it wants to display

I am reminded of Tom Weller’s world-line diagram from Science Made Stupid.

Thanks for posting this link, it is hilarious.

For complete copies of both Science Made Stupid and the sequel Culture Made Stupid, see here.

I followed the link to the tweet thread where the graph was found and it’s not much better

https://threadreaderapp.com/thread/1666482929772666880.html

A concrete “easy scenario”: LLMs are just straightforwardly generative models over possible writers, and RLHF just selects within that space. We can then select for brilliant, knowledgeable, kind, thoughtful experts on any topic.

Like, wtf does this mean.

Another recent HN title

“LLMs are too easy to automatically red team into toxicity”

Again, wtf does this mean.

I’m increasingly wondering whether I am officially too fucking old for this shit, and my brain has lost the plasticity required to make sense of all this, or I’m just spending too much time on the wrong Internet.